Microservices have emerged as a preferred architectural model for large cloud-hosted applications. One of the design patterns in the microservices architecture is the API Gateway, which acts as an abstraction layer that isolates and consolidates backend microservices into use-case-specific front-end services for client applications. This paper explores the requirements of an API Gateway and evaluates some technology options for implementing it. It also describes an example application that was used to run an informal benchmark comparing the performance of these options, and uses the results to arrive at a recommendation.

Microservices Architecture

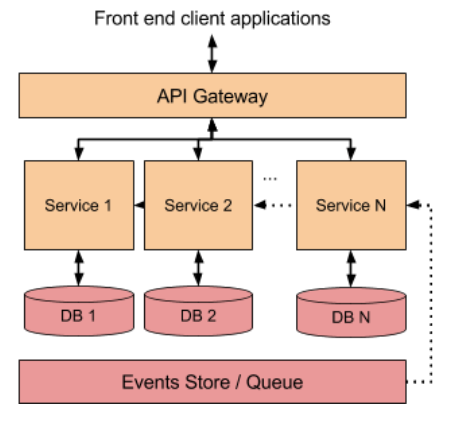

Large cloud-hosted applications are rapidly moving away from a legacy monolithic architecture towards a loosely coupled set of independently deployable microservices. The canonical microservices architecture consists of:

- API Gateway - a component that isolates and consolidates backend microservices into use-case specific front end services for client applications

- Microservices - independently deployable applications, each providing a self-contained service that typically implements an entire vertical, including the business logic as well as the associated database

- Event Store / Queue - a component that records all significant events in the application and helps to synchronize operations between multiple microservices

The following diagram represents such a canonical microservices architecture:

A Sample Microservices Application

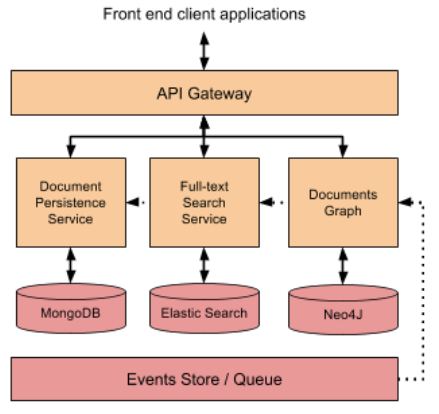

We use a microservices-based Data Processing Application as a reference example to illustrate the actual performance of an API Gateway. A simplified architecture of the Data Processing Application is illustrated below:

Let us consider two types of operations that this application handles:

- Batch operation : for ingestion and exporting of multiple documents

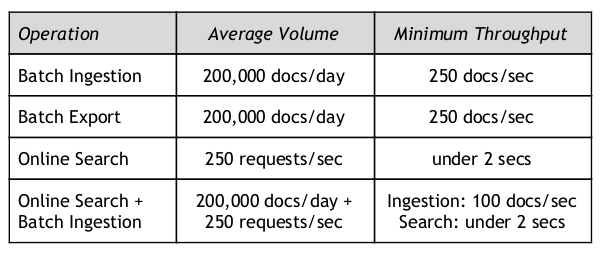

- Online operation : for upload and search for individual documents The performance levels required for these operations on a specified server configuration were as follows:

Role Of An API Gateway

The API Gateway addresses various issues that arise from the fine-grained services that microservices applications expose.

- The granularity of APIs provided by microservices often differs from what a client needs. Microservices typically provide fine-grained APIs, which means that clients need to interact with multiple services. The API Gateway can combine these fine-grained services into a single API that clients can use, thereby simplifying the client application and improving performance.

- Different clients need different data. For example, the desktop browser version of a product details page is typically more elaborate than the mobile version. The API Gateway can define client-specific APIs that contain different levels of detail, making it easy for different clients to use the same backend microservices with an optimal level of data exchange.

- Network performance differs across types of clients. For example, a mobile network is typically much slower and has much higher latency than a non-mobile network, and any WAN is much slower than a LAN. The API Gateway can define device-specific APIs that reduce the number of calls that must be made over slower WAN or mobile networks. Because the API Gateway is a server-side application, it can make multiple calls to backend services efficiently over the LAN.

- The number of service instances and their locations (host+port) change dynamically. The API Gateway can absorb these backend changes without requiring frontend client applications to determine backend service locations.

- Partitioning into services can change over time and should be hidden from clients. The API Gateway insulates clients from such internal changes in the service partitioning.

- Different clients may need different levels of security. For example, external applications may need a higher level of security to access the same APIs that internal applications can access without the additional security layer. The API Gateway can provide this additional level of security by verifying that each type of client application is authorized.
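As an illustration of the first two points, here is a minimal sketch of a gateway endpoint that fans out to three fine-grained services in a single call and returns a trimmed view to mobile clients. It is written in Python, with `asyncio` standing in for an event-driven gateway runtime. The service functions, their payloads, `MOBILE_FIELDS` and the `"mobile"`/`"desktop"` client types are all hypothetical, and `asyncio.sleep` simulates the network calls.

```python
import asyncio

# Hypothetical fine-grained backend services; asyncio.sleep stands in
# for the network round trip to each one.
async def product_service(product_id: str) -> dict:
    await asyncio.sleep(0.05)
    return {"id": product_id, "name": "Widget", "description": "Full marketing copy"}


async def review_service(product_id: str) -> dict:
    await asyncio.sleep(0.05)
    return {"rating": 4.5, "reviews": ["Great", "Works as expected"]}


async def inventory_service(product_id: str) -> dict:
    await asyncio.sleep(0.05)
    return {"in_stock": True, "warehouses": ["east", "west"]}


# Hypothetical per-client view: mobile clients get fewer fields.
MOBILE_FIELDS = ("id", "name", "rating", "in_stock")


async def product_details(product_id: str, client: str) -> dict:
    """One coarse-grained gateway API composed from three fine-grained calls."""
    # Fan out to all three backends concurrently; the client sees one call.
    product, reviews, inventory = await asyncio.gather(
        product_service(product_id),
        review_service(product_id),
        inventory_service(product_id),
    )
    combined = {**product, **reviews, **inventory}
    if client == "mobile":
        return {field: combined[field] for field in MOBILE_FIELDS}
    return combined  # desktop clients receive the full, more elaborate view


if __name__ == "__main__":
    print(asyncio.run(product_details("p-1", "mobile")))
```

A real gateway would make HTTP calls instead of sleeping. The design point is that the client issues one request over its (possibly slow) network, while the fan-out to the backends happens on the server side.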

API Gateway Technical Requirements

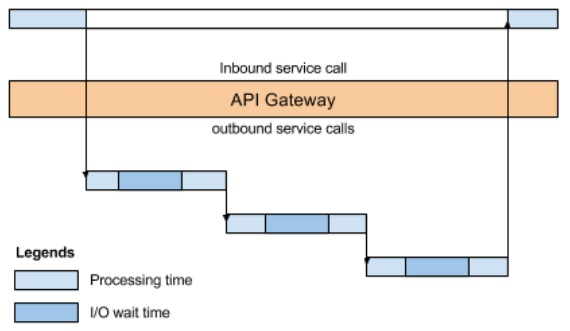

The API Gateway thus either acts as a proxy or router to the appropriate backend service, or provides a combined front-end service by fanning out to multiple backend services. As a result, the API Gateway needs to handle a large number of simultaneous incoming and outgoing service calls. It therefore needs to use its resources, primarily CPU and RAM, efficiently for each request, so that it can handle the maximum number of concurrent service calls. Since all service calls involve network I/O, each one includes some I/O wait time, which grows as the network gets slower and lasts until the backend service responds. The following diagram illustrates a single inbound service request that involves executing three sequential outbound service requests.

As seen from this diagram, each inbound service call involves at least one outbound service call, and each outbound service call involves I/O wait time until the service responds with a result.
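The request flow in this diagram can be sketched as follows. This is a minimal Python illustration, assuming three hypothetical backend calls named `validate`, `transform` and `store` whose I/O wait times (0.1 s, 0.2 s and 0.3 s, also hypothetical) are simulated with `asyncio.sleep`. Because the calls are made one after another, the inbound request's latency is roughly the sum of the three waits, and almost none of that time is spent computing.

```python
import asyncio
import time

# Hypothetical I/O wait time, in seconds, for each backend service.
BACKEND_WAITS = {"validate": 0.1, "transform": 0.2, "store": 0.3}


async def call_backend(name: str, payload: dict) -> dict:
    # Stand-in for an outbound HTTP call: the coroutine is suspended
    # (waiting on I/O) for the configured time and uses no CPU meanwhile.
    await asyncio.sleep(BACKEND_WAITS[name])
    return {**payload, name: "ok"}


async def handle_inbound(payload: dict) -> dict:
    # Three sequential outbound calls: each one starts only after the
    # previous response has arrived.
    for name in ("validate", "transform", "store"):
        payload = await call_backend(name, payload)
    return payload


if __name__ == "__main__":
    start = time.perf_counter()
    result = asyncio.run(handle_inbound({"doc": "d-1"}))
    elapsed = time.perf_counter() - start
    print(result, f"{elapsed:.2f}s")  # roughly 0.1 + 0.2 + 0.3 seconds
```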

Technology Platform Options

We considered two options for the technology platform to implement the API Gateway:

- Java EE - since many of the backend services were implemented in Java EE, we considered implementing the API Gateway on the same platform

- NodeJS - also considered because of its ability to handle asynchronous, non-blocking I/O

Note: There are also JVM-based non-blocking I/O (NIO) platforms such as Netty, Vert.x, Spring Reactor, or JBoss Undertow. However, these were not considered in this evaluation.

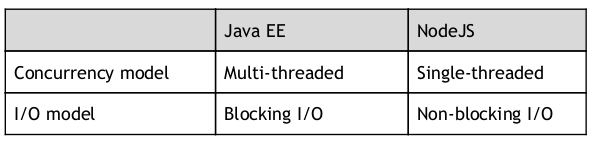

The most important differences between NodeJS and Java are their concurrency and I/O models. The Java EE platform, as used in this evaluation, handles requests with multiple threads and synchronous, blocking I/O, while NodeJS uses a single thread with asynchronous, non-blocking I/O.

Concurrency Models

The following diagram illustrates the difference between the two concurrent execution models.

In a multi-threaded environment, multiple requests can be executed concurrently, while in a single-threaded environment they are executed sequentially. Of course, the multi-threaded environment uses more resources. It may seem obvious that a multi-threaded environment will handle more requests per second than a single-threaded one. This is generally true for compute-intensive requests that make heavy use of their allocated resources. However, in cases such as the API Gateway, where requests spend much of their time waiting on I/O, the CPU and memory allocated to each thread sit idle during that wait.
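One rough way to quantify this argument uses Little's law (requests in flight = throughput × time each request spends in the system). The model below is our own back-of-the-envelope illustration, not part of the benchmark: assume each request needs $c$ seconds of CPU time and $w$ seconds of I/O wait, and a blocking server has a pool of $T$ threads. A blocking thread is held for the whole $c + w$, whereas the single non-blocking thread is occupied only for $c$, so the throughput $\lambda$ is bounded by

$$\lambda_{\text{blocking}} \le \frac{T}{c + w}, \qquad \lambda_{\text{non-blocking}} \le \frac{1}{c}$$

With hypothetical values $c = 1\,\text{ms}$, $w = 99\,\text{ms}$ and $T = 50$:

$$\lambda_{\text{blocking}} \le \frac{50}{0.1\,\text{s}} = 500\ \text{req/s}, \qquad \lambda_{\text{non-blocking}} \le \frac{1}{0.001\,\text{s}} = 1000\ \text{req/s}$$

Under these assumptions the blocking server would need $T = 100$ threads, each with its own memory, to match the single event-loop thread. The multi-threaded server is also bounded by $P/c$ for $P$ cores, but with $P = 4$ that limit (4000 req/s) is not the binding one here.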

I/O Models

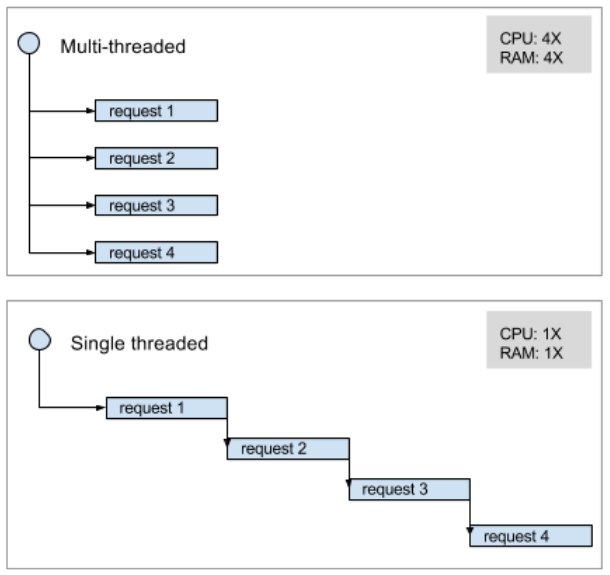

The following diagrams illustrate a scenario in which each request involves I/O wait time, comparing the multi-threaded, blocking I/O model with the single-threaded, non-blocking I/O model.

From this diagram it is clear that, although the single-threaded model still takes longer to process multiple requests, the additional delay is much smaller than in the previous scenario, where requests did not involve I/O wait times. It is therefore likely that, when a large number of concurrent requests must be handled, the single-threaded, non-blocking I/O model will give better throughput than the multi-threaded, blocking I/O model.
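The effect shown in this diagram can be reproduced with a small simulation. The sketch below is in Python: a `ThreadPoolExecutor` calling `time.sleep` stands in for multi-threaded blocking I/O, and a single `asyncio` event loop stands in for the NodeJS model. The request count, wait time and pool size are hypothetical values, not the parameters of the benchmark described below.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor

REQUESTS = 200   # hypothetical number of concurrent inbound requests
IO_WAIT = 0.05   # hypothetical I/O wait per request, in seconds
POOL_SIZE = 20   # hypothetical size of the blocking thread pool


def blocking_request(_: int) -> None:
    # Blocking I/O: the worker thread is parked for the whole wait.
    time.sleep(IO_WAIT)


async def non_blocking_request() -> None:
    # Non-blocking I/O: the single event-loop thread is free to start
    # other requests while this one waits.
    await asyncio.sleep(IO_WAIT)


def run_threaded() -> float:
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=POOL_SIZE) as pool:
        list(pool.map(blocking_request, range(REQUESTS)))
    return time.perf_counter() - start


async def run_event_loop() -> float:
    start = time.perf_counter()
    await asyncio.gather(*(non_blocking_request() for _ in range(REQUESTS)))
    return time.perf_counter() - start


if __name__ == "__main__":
    threaded = run_threaded()
    looped = asyncio.run(run_event_loop())
    print(f"thread pool ({POOL_SIZE} threads): {REQUESTS / threaded:.0f} req/s")
    print(f"single event loop: {REQUESTS / looped:.0f} req/s")
```

With these values the pool can only have 20 requests waiting at once, so 200 requests take about ten rounds of the 50 ms wait (roughly 0.5 s), whereas the event loop lets all 200 wait at the same time. A pool of 200 threads would close the gap, at the cost of 200 thread stacks.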

Benchmark Test Results

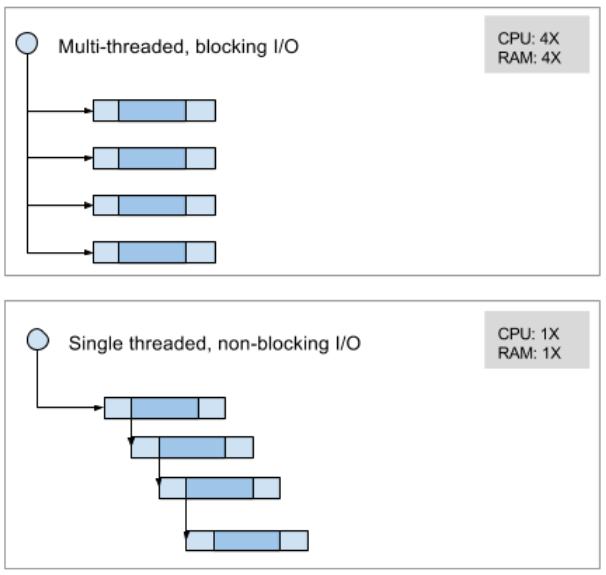

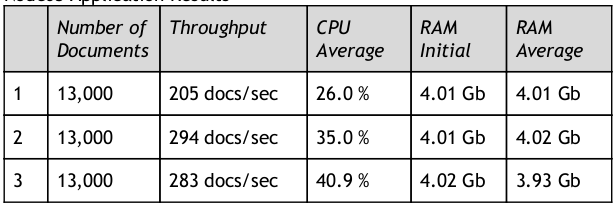

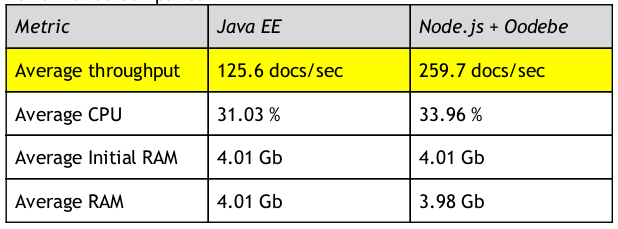

To test the performance of the two platforms, we implemented a sample API Gateway that simulated the three backend service calls using timed mock services, so that we could hold the I/O wait time at a predictable level based on the expected response time of each backend microservice. We implemented a single inbound API for ingestion of batch documents on both platforms, one using the existing Java EE platform and one using NodeJS. We then ran a series of tests on both implementations, ingesting the same set of documents. The tests were conducted on the same m4.xlarge EC2 instance on Amazon Web Services with 4 vCPUs and 16 GiB of memory. CPU and RAM utilization were monitored using Amazon CloudWatch. The results were as follows:

JAVA APPLICATION RESULTS

NODE JS APPLICATION RESULTS

PERFORMANCE COMPARISON

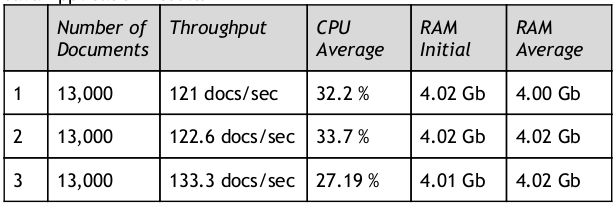

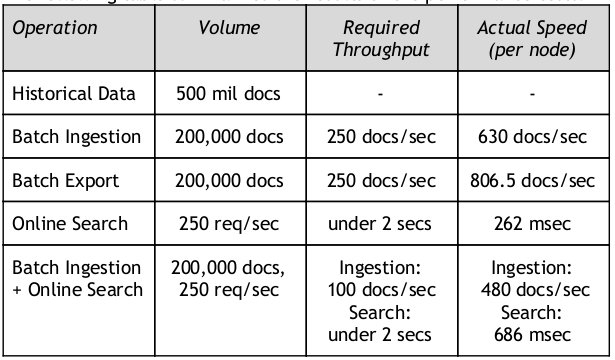

The results validated our expectation that the single-threaded, non-blocking I/O model of NodeJS would provide better throughput per server instance than the multi-threaded, blocking I/O model in Java. The final implementation of the Data Processing Engine (DPE) was deployed using NodeJS, and extensive performance tests were carried out on a clustered setup with two DPE nodes.

The following table summarizes the results of the performance tests:

Conclusions

Based on the analysis of the API Gateway requirements and the benchmark tests, we recommend using a non-blocking I/O platform such as NodeJS to implement the API Gateway. The tests conducted in this study did not evaluate other non-blocking I/O platforms. Further, since this study was carried out, there have been reported success stories of API Gateways implemented with JVM-based technologies such as Netflix’s Hystrix library. However, these have not yet been benchmarked by Accion Innovation Center.